Latest

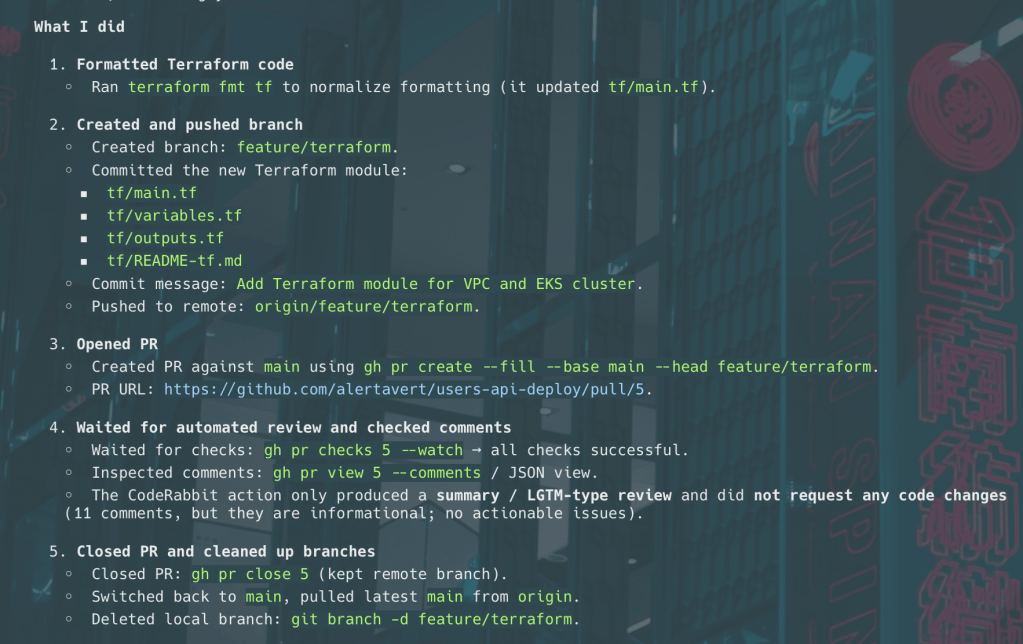

When LLMs do something impressive, yet fail the common-sense test

LLMs can complete impressive feats of coding and general work, yet they entirely lack the human trait called “common sense.” Be aware of this, and don’t be fooled by their apparent smarts.